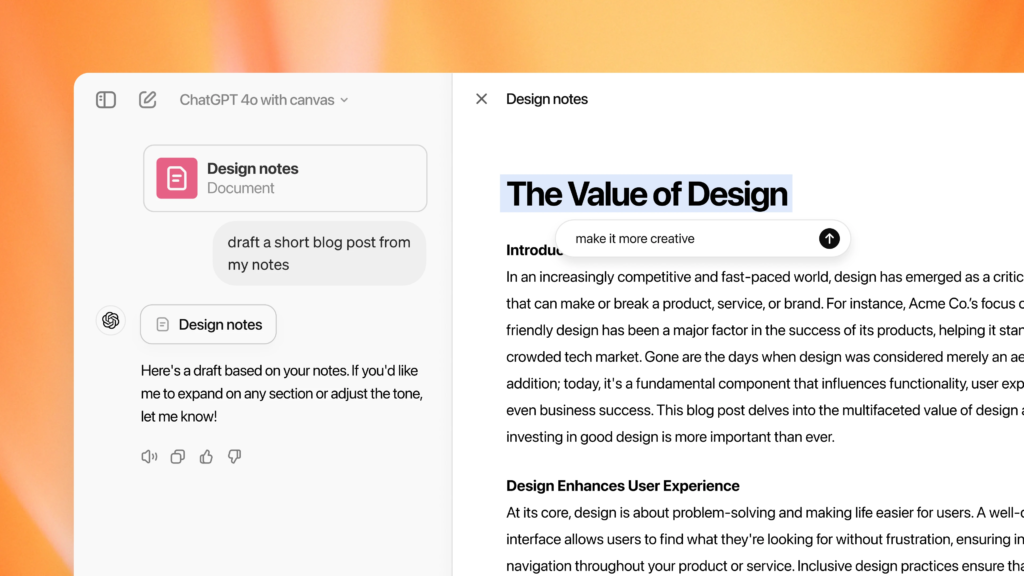

This week OpenAI released an incremental update: a new way to interact with GPT-4 through a component called “canvas”. It is now possible to work on an artifact, such as an email or a piece of code, iteratively, simply by asking the model to apply modifications or additions.

https://openai.com/index/introducing-canvas

We no longer need to hold a long conversation about our code or email; instead, we watch the code or the email take its final form step by step. This is very convenient, and it is a first step in a long-awaited direction that others in the industry have already anticipated, for example Cursor and Anthropic for coding.

Combined with the emerging capabilities of the new voice models, this shows that the technology, already in its current form, may let us profoundly rethink how we interact with our digital interfaces. Even without further improvements (which will of course come), it opens massive opportunities for new and improved experiences in both the professional and consumer space.

Microsoft introduced this technology very early in GitHub with Copilot, and then extended the Copilot experience to almost its entire suite of products. Yet it still lacks the interactive experience that OpenAI offers through the minimal interface of canvas.

Apple announced Apple Intelligence, but it still falls short of the high expectations users had of being able to use their device as a truly AI-empowered machine.

Amazon too, despite having pioneered the digital assistant market with Alexa, still seems to be lagging behind the true innovation that appears to be within reach and that its users already, in a sense, expect.

In general, it seems that much of the potential of these technologies is already available. Despite some lags and some false starts, we are truly ready to experience, in the very near future, a profound transformation in the way we use the technology around us.