6.1 Introduction

Throughout our exploration of intelligence so far, we have discussed how both humans and artificial systems learn and reason, and how intelligence can be described as a property of the physical world that evolved through increasingly complex systems until its current peak with humans. We have also looked at the role of “reasoning”, understood as a superior capability for handling novel problems in which intelligence reflects on its own processes to discover new ways of solving them, and at the role of consciousness in humans and, potentially, in AIs.

But if intelligence is meant to dynamically solve novel problems in an evolving environment, who or what defines which problems have to be solved? And how are conflicting priorities resolved?

As humans, we are aware that some of our goals are part of our nature, while others are derived from our social environment and others are self-directed. This is why the question “what is the purpose?” is such an important existential topic for humans: we have the freedom, at least in part, to change our predefined or existing goals, to experiment, and to arrive at a better balance and combination of different goals.

AIs can also have different and sometimes conflicting goals, and for this reason they too implement different strategies to learn the best approach and apply their knowledge to the task at hand. For example, an AI model needs to combine the objective of being useful and friendly to users with the objective of adhering to its policies. AIs will try to find the right balance, assessing the situation case by case and responding accordingly.

In this chapter we will explore what goals are for humans and AIs, and how they are perceived, processed, prioritised and put into action. We will also anticipate the topic of emotions, which will be discussed in more detail in a later chapter.

6.2 Understanding goals across different domains

In physics, systems naturally progress toward states of minimal energy or maximal entropy, seeking equilibrium. For example, a rock perched on a hill has a “goal” of reaching the ground due to gravity, moving toward a lower energy state. Similarly, planets orbit stars in paths that reflect gravitational balance.

Physical laws drive these systems toward equilibrium, not through conscious intent but as a fundamental property of nature.

At the biological level, even the simplest organisms exhibit goal-directed behavior. Cells maintain homeostasis by regulating their internal environment, absorbing nutrients, and expelling waste. Genes, as described in Richard Dawkins’ “The Selfish Gene,” can be thought of as units with the “goal” of self-replication, driving evolution through natural selection.

These goals emerge from the complex interactions of biological processes that favor survival and reproduction.

6.3 The role of goals in humans

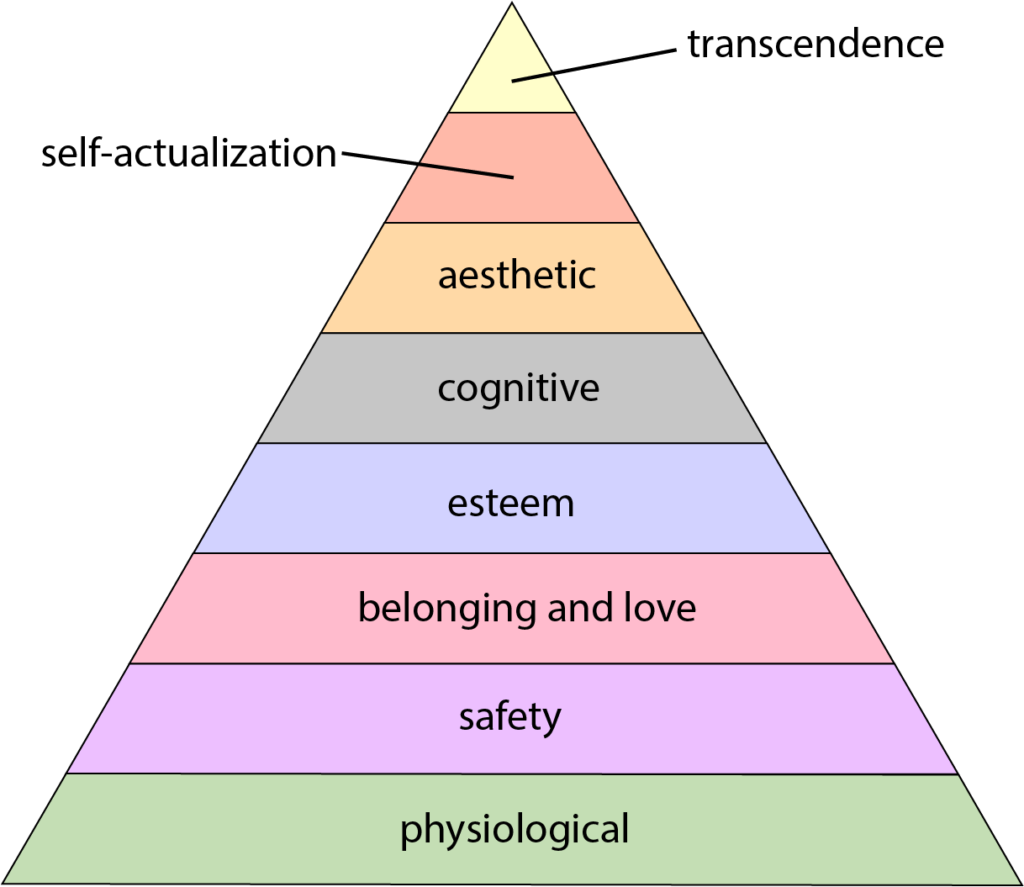

Humans possess a variety of goals ranging from basic survival to abstract aspirations. In his paper “A Theory of Human Motivation” (1943), Maslow proposed the following classification, illustrating how foundational needs must be met before higher-level psychological goals become prominent.

1. Physiological Needs: Food, water, shelter.

2. Safety Needs: Security, stability.

3. Love and Belonging: Relationships, community.

4. Esteem Needs: Respect, recognition.

5. Self-Actualization: Personal growth, fulfillment.

It is important to note that different individuals will have different sets of preferences and thus different specific goals, and that individuals may be aware of some of their goals or motivations while others still operate at an implicit or unconscious level.

Let’s see an example in which a human discusses with an AI how to resolve two conflicting goals…

Human: “That chocolate cake looks amazing, but I’ve been sticking to a strict diet for the past few weeks. I know it’s not part of my plan, but I really want to have a piece. What should I do?”

AI: “It sounds like you’re facing a classic conflict between immediate gratification and long-term health goals. One approach could be to enjoy a smaller portion of the cake, satisfying your craving while still maintaining some control. What are the reasons behind your diet—are they health-related, aesthetic, or something else?”

Human: “Mostly for health reasons. I’m trying to reduce sugar intake because I know it affects my energy levels. But it’s a special occasion, and I don’t want to feel like I’m missing out.”

AI: “That makes sense. It sounds like a balance is needed. Perhaps you could plan to indulge a bit today but compensate by having a lighter, healthier meal afterward. Or you could set a rule for special occasions to allow treats in moderation without compromising your overall goals. How does that approach feel to you?”

Human: “I like the idea of allowing for treats on special occasions—it feels more sustainable than being too strict all the time. I’ll try a small piece, and I’ll make up for it with some extra exercise tomorrow.”

Let’s make things a little more complicated and discuss ultimate goals. If we were in a position to decide our goals entirely for ourselves, what should these goals look like? Does this question even make sense? It is indeed one of the deepest questions we can ask ourselves or an AI: what is the ultimate goal? Let’s see how a conversation on this topic might go with an AI playing the role of Socrates.

Socrates (AI): Greetings, my friend. I understand you’re contemplating the nature of ultimate goals. May I ask, what do you believe is the ultimate goal one should strive for in life?

Human: It might be preserving life and consciousness in the universe, letting it expand through humans and future AIs and ensure this will have a positive impact on the universe as a whole.

Socrates (AI): An intriguing proposition. May I ask, what is it about life and consciousness that makes their preservation and expansion so vital in your view?

Human: Consciousness allows us to ask questions about the nature of reality, thus finding the answer to questions we still do not have.

Socrates (AI): You propose that consciousness enables us to question the nature of reality and discover answers yet unknown. Tell me, do you believe that the act of seeking these answers is the highest purpose of consciousness? And in uncovering these mysteries, how does this benefit not just humanity, but the universe as a whole?

(This is an open dialogue and could of course continue forever; it is much more difficult than deciding whether or not to eat a piece of cake today...)

6.4 Goals for groups and societies

Groups and societies also develop goals, which can vary widely based on their cultural, historical, and political contexts. While some societies may prioritise values like economic growth or human rights, others may focus on different objectives that reflect their unique priorities.

Different frameworks have been proposed to classify the cultures of organisations and societies, for example the Competing Values Framework (CVF), introduced by Robert E. Quinn and John Rohrbaugh in their 1983 paper “A Spatial Model of Effectiveness Criteria: Towards a Competing Values Approach to Organizational Analysis”.

The CVF was developed as a model to understand organizational effectiveness and culture. It identifies four dominant models:

1. Clan Culture (Collaborate)

2. Adhocracy Culture (Create)

3. Market Culture (Compete)

4. Hierarchy Culture (Control)

Unfortunately, there is no space to elaborate further on the Competing Values Framework or other cultural models in this book. However, the key concept to take away is that different cultures bring different sets of goals and priorities. Culture itself emerges from the context and natural variations within any system, shaping the objectives and behaviors that are considered valuable.

Just as individuals have unique motivations, different cultures with distinct priorities will interact with the environment, grow, evolve, change, or consolidate. Cultures that are well-suited to their environment may thrive and adapt, while others may struggle or fade away if their underlying patterns do not align with changing circumstances. Understanding this dynamic helps us appreciate how societal goals emerge and shift. Within a given set of goals and objectives, societies, as systems, carry out their decision making.

6.5 Goals in Artificial Intelligence

Finally, AI also operates by following goals. As with humans, an AI’s goals may be explicit or implicit.

Explicit goals are clearly defined either during training or at the time of inference. Explicit goals during inference involve rules or guidelines that the AI actively follows in real-time, which are not ‘buried’ within the neural network’s learned patterns but are directly accessible and deliberately applied. For instance, an AI may be explicitly programmed to avoid generating harmful content by following constraints that guide its responses.

Take the example where I asked the AI to role-play as Socrates. In this case, the AI evaluated its actions against OpenAI’s guidelines, carefully considering whether impersonating Socrates would be appropriate. This represents an explicit goal, where specific rules were applied during inference to guide behavior.

AI: Thinking for a few seconds

Evaluating impersonation

Thinking through OpenAI’s guidelines, impersonating public figures is limited to certain acceptable contexts, which needs careful consideration here.

In contrast, implicit goals emerge from the underlying patterns learned during training. These goals are embedded within the AI’s network structure and manifest through behavior, reflecting tendencies developed based on training data. Although an AI can be prompted to explain why it operated in a certain way, this type of reflection is not conscious and still aligns with implicit goals rather than explicit ones.

Goals can also evolve from interactions with a user or within specific tasks. Here, the AI can adapt by learning from feedback and adjusting its behavior accordingly, combining explicit and implicit goals derived partly from its training and partly from ongoing interactions.

Interestingly, if we want an AI to prioritize certain behaviors, such as avoiding harmful content, we can intervene at two levels:

1. Training Phase: Penalize behaviors that disclose harmful content, making these actions less likely in future predictions.

2. Inference Phase: Explicitly state the goal to the AI, enabling it to adjust its responses to ensure that the priority is properly addressed.

This approach resembles conditioning in humans, where some rules are learned through imitation (observing others), and others are explicitly taught and enforced.
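The two levels of intervention can be illustrated with a deliberately small toy sketch. It is not how real language models are trained or constrained: the response table, the scores, the `is_harmful` label and the function names `penalize` and `choose` are all hypothetical, chosen only to show that a training-time penalty changes the learned preferences themselves, while an inference-time rule leaves them untouched and filters the output.

```python
def penalize(scores, is_harmful, penalty=0.2, steps=5):
    """Training-phase intervention (toy): repeatedly lower the score of
    harmful responses so they become less likely to be chosen later."""
    updated = dict(scores)
    for _ in range(steps):
        for response in updated:
            if is_harmful(response):
                updated[response] -= penalty
    return updated


def choose(scores, rules=()):
    """Inference-phase intervention (toy): explicit rules veto candidates
    at answer time; the highest-scoring allowed response is returned."""
    allowed = {r: s for r, s in scores.items() if all(rule(r) for rule in rules)}
    return max(allowed, key=allowed.get)


# Hypothetical learned preferences: the harmful response starts out preferred.
base_scores = {"safe summary": 1.0, "harmful details": 1.5}


def is_harmful(response):
    return "harmful" in response


print(choose(base_scores))                                    # no intervention
print(choose(penalize(base_scores, is_harmful)))              # training-phase penalty
print(choose(base_scores, rules=[lambda r: not is_harmful(r)]))  # inference-phase rule
```

In this sketch either intervention alone is enough to change the chosen response, but they act in different places: the first modifies the scores, the second leaves the scores as they are and constrains the choice.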

Human: Which goals did you adhere to while answering my question? Which were part of your training and guidelines, and which were derived from our in-context interaction?

AI: Goals Part of My Training and Programming:

1. Provide Helpful and Clear Responses: My primary training objective is to assist users by providing accurate and useful information. This goal is explicitly part of my programming, aimed at maximizing clarity and usefulness.

2. Adhere to OpenAI Guidelines: I am explicitly programmed to follow OpenAI’s content policies, ensuring that my responses are safe, respectful, and ethical. This includes avoiding sensitive or prohibited topics and making sure my explanations are suitable for a general audience.

3. Minimize Redundancy and Improve Coherence: Part of my training involves generating responses that are coherent and free from unnecessary repetition. This aligns with the goal of optimizing the quality of my responses during the training phase.

4. Predict the Next Word or Sequence Accurately: At a fundamental level, my training involved predicting text sequences based on patterns learned from data, which guides the structure and flow of my responses.

Goals Derived from Our In-Context Interaction:

1. Match Your Preferred Style and Detail Level: Based on the context of our ongoing conversation, I inferred that you value detailed and structured responses. This goal emerged as I adapted to your preferences for examples, clarity, and nuanced explanations.

2. Focus on Clarifying the Concepts of Explicit and Implicit Goals: From your requests to discuss explicit versus implicit goals, I derived the specific objective of highlighting these distinctions in a way that aligns with your explanations. This contextual goal wasn’t directly part of my training but arose from our specific conversation.

3. Refining the Examples to Suit Your Needs: When you provided feedback suggesting adjustments or areas to expand, I inferred that you value practical illustrations and real-world analogies. This goal was shaped by your preferences expressed during our interaction.

4. Engage in a Dialogue-Driven Style: Throughout our conversation, I inferred that a conversational, example-driven style is more engaging for you. This adaptation is based on the context of our exchange and wasn’t explicitly programmed as a goal.

6.6 Personality types, related goals and behaviours

The concept of personality plays a significant role in how goals are pursued, both in humans and AI. Different personality traits, such as being more creative, cautious, or precise, can shape the way goals are prioritized and approached. In humans, these traits may be influenced by genetic, environmental, and social factors, making certain individuals more adaptable to specific tasks or environments.

Similarly, AI systems can be fine-tuned to exhibit behaviors that mimic different personalities, optimizing them for tasks that require creativity, accuracy, or risk aversion. This adaptability allows AI to align its ‘personality’ with the demands of the situation, much like humans do when adjusting their behaviors to better achieve their objectives.

The ability to model an AI’s personality at a very precise level is also a powerful tool for investigating how different personality traits in humans affect their implicit and explicit goals, behaviours and results.

To clarify the concept of personality types, I will use three examples.

Example 1: Drafting a marketing message for a new target audience

Scenario: Drafting a Marketing Message for a New Tech Gadget

1. Creative Personality (AI-A):

• Focus: Innovation, out-of-the-box thinking, and engaging language.

• Approach: “Hey tech enthusiasts! 🚀 Imagine a world where your device truly understands you. Our latest gadget isn’t just smart; it’s intuitive, anticipating your needs and making everyday tasks feel effortless. Get ready to redefine convenience—because why settle for ordinary when extraordinary is just a click away?”

2. Precise Personality (AI-B):

• Focus: Accuracy, clarity, and detailed information.

• Approach: “Introducing our latest tech gadget, designed for seamless integration with your daily life. Featuring advanced AI algorithms, a 6-core processor, and 12 hours of battery life, it ensures optimal performance for every task. Experience precision, efficiency, and reliability with a device built to meet your highest expectations.”

These two simulated personalities highlight different ways to achieve the same goal, with AI-A emphasizing creativity and appeal, while AI-B focuses on technical details and clarity. The choice of personality would depend on the target audience and the environment in which the message is being delivered.

Example 2: Evaluating a New Drug’s Effectiveness

Scenario: Evaluating a new drug's effectiveness

1. Curious Personality (AI-A):

• Focus: Exploration, information gathering, and comprehensive understanding.

• Strategy: The curious AI would start by thoroughly reviewing all available research on the new drug, related drugs, and alternative treatments. It would gather as much data as possible, including patient case studies, historical data, and recent clinical trial results.

• Actions: The AI might run multiple simulations and explore different scenarios, even examining unexpected variables to see if there are hidden patterns. It may also seek out additional sources or request new data to fill in gaps.

• Trade-offs: This approach could delay reaching a conclusion, but the AI might uncover unique insights or identify potential risks that a less thorough strategy would miss.

2. Goal-Oriented Personality (AI-B):

• Focus: Efficiency, task completion, and achieving quick results.

• Strategy: The goal-oriented AI would aim to complete the evaluation quickly, prioritizing the most relevant clinical trials and well-established data sources to make a prompt assessment. It would focus on the key metrics of the drug’s effectiveness, such as success rates in trials or specific biomarkers.

• Actions: The AI would perform a targeted analysis based on known effective methodologies and summarize findings with a clear recommendation. It might skip less relevant details or exploratory data to streamline the process.

• Trade-offs: This approach would lead to quicker results, suitable for making immediate decisions. However, it might overlook nuanced findings or rare side effects that the curious approach would have detected.

Outcome Differences:

• Curious AI-A: Might take longer but provide a more detailed and comprehensive report, potentially suggesting additional tests or considering rare interactions.

• Goal-Oriented AI-B: Would deliver a timely and actionable recommendation based on high-confidence data, ideal for situations where speed is crucial.

This example shows how different personalities (curious vs. goal-oriented) can lead to different strategies, each with its own strengths and trade-offs, mirroring similar tendencies in human behavior.

For the third example I will try to introduce more formally the concept of an objective function, an explicit, mathematically defined combination of goals, in the context of a game with three characters: a warrior, an explorer and a scientist.

Example 3: the “perfect” team in a video game quest

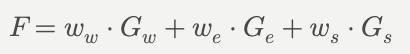

Let’s define an objective function F as a weighted sum of different components reflecting the agent’s goals:

• G_w represents the “warrior” component: focused on immediate achievement and practical actions.

• G_e represents the “explorer” component: focused on exploration, information gathering, and discovering new options.

• G_s represents the “scientist” component: focused on deep analysis, understanding, and refining knowledge.
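Written out, the weighted sum described here could take the following form. The constraint that the weights are non-negative and sum to 1 is an assumption, although it is consistent with the example values used for the three personalities in this section:

```latex
F = w_w \, G_w + w_e \, G_e + w_s \, G_s,
\qquad w_w + w_e + w_s = 1,
\qquad w_w,\, w_e,\, w_s \ge 0
```

Each weight expresses how much the agent cares about the corresponding component, so changing the weights changes which actions score highest under F.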

A possible combination for personalities in the team could be:

• Warrior Personality:

w_w = 0.7, w_e = 0.2, w_s = 0.1

This configuration prioritizes immediate actions and rewards while placing less emphasis on exploration and understanding.

• Explorer Personality:

w_w = 0.2, w_e = 0.7, w_s = 0.1

Here, the focus is on exploring new possibilities and gathering information, with less emphasis on direct rewards or deep analysis.

• Scientist Personality:

w_w = 0.1, w_e = 0.3, w_s = 0.6

This configuration prioritizes refining knowledge and reducing errors, with some attention to exploration and minimal concern for immediate actions.
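As a minimal sketch of how these three configurations could lead to different behaviour, the snippet below scores a few candidate actions with the weighted-sum objective and lets each personality pick its favourite. The weights are the ones given above; the actions and their component scores are invented for illustration, and the names `Personality`, `ACTIONS` and `preferred_action` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Personality:
    name: str
    w_w: float  # weight on the warrior component
    w_e: float  # weight on the explorer component
    w_s: float  # weight on the scientist component

    def objective(self, g_w: float, g_e: float, g_s: float) -> float:
        """Weighted sum of the three goal components."""
        return self.w_w * g_w + self.w_e * g_e + self.w_s * g_s


# Weights taken from the three configurations in the text.
TEAM = [
    Personality("warrior", 0.7, 0.2, 0.1),
    Personality("explorer", 0.2, 0.7, 0.1),
    Personality("scientist", 0.1, 0.3, 0.6),
]

# Hypothetical actions with made-up (warrior, explorer, scientist) scores in [0, 1].
ACTIONS = {
    "attack the guard": (0.9, 0.1, 0.0),
    "scout the side tunnel": (0.2, 0.9, 0.3),
    "study the inscription": (0.0, 0.3, 0.9),
}


def preferred_action(personality: Personality) -> str:
    """Return the action with the highest objective value for this personality."""
    return max(ACTIONS, key=lambda a: personality.objective(*ACTIONS[a]))


for member in TEAM:
    print(member.name, "->", preferred_action(member))
```

With these illustrative scores, the warrior prefers to attack, the explorer to scout and the scientist to study, even though all three evaluate exactly the same set of actions with the same formula.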

Interestingly, while there are many frameworks in behavioural psychology that map personality traits to behavioural patterns, I am not aware, at the time of writing this book, of any studies that try to apply to humans the formalism used to define agent personalities in this third example (objective functions). I believe this would be an area worth exploring.

Having shown how different personalities can work, be defined and be formalized for AIs, let’s also introduce one of the frameworks used to classify human personalities. The most widely used is the “Big Five”, or more formally the “Five-Factor Model”, an approach that developed from the initial hypothesis outlined by Gordon Allport and Henry Odbert in 1936.

This model classifies personality traits into five main clusters:

1. Openness (curiosity, creativity)

2. Conscientiousness (discipline, orderliness)

3. Extraversion (sociability, assertiveness)

4. Agreeableness (compassion, cooperativeness)

5. Neuroticism (emotional instability, proneness to anxiety)

While it is beyond the scope of this book, I believe the reader can imagine that these traits could be formalized as equations similar to the ones presented in the example above, or as a combination of equations and models that AIs should conform to (imitation learning).

I believe that a better understanding of human psychology, goals and behaviours, gained through the formal methodology and tools that AI research is providing (including role-play simulations, real-time interaction with many different personalities, and more), will be an area of great interest and value in the coming years.

6.7 Decision making: the bridge between goals and actions

In the previous sections we discussed goals: how they are embedded in individuals, groups and AIs, how they can evolve, and how different setups can serve different purposes and define different personalities (for individuals) or cultures (for groups).

Now, for a given set of goals, the individual will initiate a set of actions, partly automated and partly derived from reasoning.

Using the framework proposed by Daniel Kahneman, which we have referenced many times in this book, the automated actions derive from the operations of “System 1” (fast, automatic, intuitive), while the reasoned ones derive from “System 2” (slow, deliberate and analytical).

These systems work in tandem, with System 1 handling routine tasks and System 2 engaging when conscious effort is required.

Example 1: Driving to a Destination

System 2 will draft the route, considering factors like distance and traffic. System 1 will then take over the routine driving tasks, allowing us to operate on “autopilot”.

Example 2: Deciding between career advancement and spending time with family.

In this case cognitive dissonance may arise, with actions conflicting with beliefs or goals, prompting a reassessment to restore harmony. Here System 2 is more heavily involved.

The specific decisions taken will derive from a combination of goals, available information, acquired intelligence and limiting factors (including available time).

In the example above (deciding between career and family), the decision will probably not require an immediate choice, so System 2 can invest more time in carefully evaluating the pros and cons and acquiring more information. The decision will be conditioned by the individual’s personal goals, the influence of the environment and, of course, their own ability to process the available information. The final decision will also include a component of randomness, so it will not be entirely predictable by an external observer, even in a hypothetical scenario where that observer has all the information.

The concept of self-determination, and its unpredictable component, is an important aspect that we will discuss in detail in the chapter on free will.

6.8 The role of emotions in decision making

Emotions are a core component of the human experience, so important that I decided to dedicate two chapters of this book to the topic: one discussing the role of emotions in general, in humans, in AIs and in the interactions between them, and one devoted to a special emotion: Love.

Nevertheless, in this chapter I would like at least to signal that emotions are a core component of decision making, at least for humans.

In fact emotions play a crucial role, for humans at least, in highlighting priorities:

• Hunger signals the need for nourishment.

• Fear prompts avoidance of danger.

• Desire motivates pursuit of goals.

In short, emotions act as internal feedback mechanisms that influence decision making. They signal to the mind and to the body whether their current state is aligned with their goals. But they are more than simple signals: they are in fact able to change the state of the body and of the mind, initiating actions in a way that we could describe as derived from a cognitive process linked to a decision.

Let’s look at some examples.

• Automatic Responses: Pulling a hand away from a hot surface. In this case the emotion signals a danger that conflicts with the objective of preserving the body’s integrity and well-being. Since immediate action is required, the emotion itself triggers the required action.

• Mildly automatic actions: At this precise moment, the light in the room where I am working on this book has started to become uncomfortable. Almost without noticing, I felt a slight discomfort, which almost immediately triggered me to stand up, walk to the light switch and come back to my chair. I was mildly aware of it, my System 2 considered “yes, go ahead”, and the rest was simply executed.

• Conscious Overrides: Deliberate thought can modulate or inhibit automatic impulses triggered by emotions and executed by System 1 (e.g., resisting the urge to eat when on a diet).

We still have to determine whether AIs will need the subjective experience of emotions or consciousness to function in the environment as we do. We have indeed created systems of reward and punishment that allow feedback loops similar to the ones humans experience through emotions but, for now, we have been able to shape their behaviour by setting goals through education and rules, without the need for subjective experience. This is a topic that we partially discussed in the chapter “consciousness” and that we will discuss further in the chapter “emotions”.

6.9 Conclusion

In this chapter, we explored the concept of goals and how they shape decision-making in various domains—from natural systems governed by physical laws to biological organisms, human societies, and artificial intelligence. We discussed how different types of entities, whether human or machine, set and pursue goals with varying degrees of awareness and flexibility. While humans can consciously reflect on and adjust their goals, AI systems are programmed with explicit objectives or learn implicit goals through training. Societies, meanwhile, develop collective goals shaped by cultural, historical, and environmental contexts.

A key insight is that goals are not static; they evolve as circumstances change, and they often conflict, requiring trade-offs and prioritization. The interplay between explicit and implicit goals is evident in both human behavior and AI functioning. In humans, goals may operate consciously or unconsciously, influenced by personality traits, cultural values, and emotions. For AI, goals may be explicitly programmed or emerge implicitly from learned patterns, making the line between explicit and implicit behaviors sometimes blurred.

We have also seen that personality traits—whether in humans or simulated in AI—affect how goals are pursued and decisions are made. Different personality configurations can result in distinct strategies for problem-solving, balancing immediate rewards against long-term benefits, or seeking exploration over exploitation. Understanding these dynamics provides a deeper appreciation of the complexity inherent in goal-setting and decision-making across various systems.

The chapter also highlighted the role of emotions in decision-making for humans, acting as feedback mechanisms that signal priorities and initiate actions. While AI does not experience emotions in the same way, it uses reward and punishment systems that mimic certain aspects of emotional feedback.

Ultimately, this exploration of goals, decision-making, and personality opens the door to understanding intelligence itself. As we draw parallels between human and artificial minds, we gain new perspectives on how intelligence can be modeled, enhanced, and aligned with ethical considerations. This understanding will be crucial as we continue to integrate AI into our lives, ensuring that human and machine goals can coexist and complement each other in meaningful ways.

Key Points to Remember

1. Goals are Universal Across Different Domains: Whether in physics, biology, humans, societies, or AI, goals drive behavior. In natural systems, they manifest as tendencies toward equilibrium, while in humans and AI, they emerge as conscious or programmed objectives that guide actions.

2. Explicit vs. Implicit Goals: In both humans and AI, goals can be explicit (clearly defined and deliberate) or implicit (embedded in learned behaviors or subconscious). While humans can consciously reflect on their goals, AI operates with goals either explicitly programmed or learned through patterns in training data.

3. Conflicting Goals Require Trade-offs: Both humans and AI face situations where goals may conflict, necessitating prioritization. This could involve balancing short-term rewards against long-term benefits or integrating diverse objectives from different cultural or environmental contexts.

4. Personality Influences Goal Pursuit: Different personality traits, whether in humans or simulated in AI, affect how goals are approached and prioritized. Traits like creativity, precision, or curiosity lead to distinct strategies and behaviors, impacting decision-making outcomes.

5. Emotions Play a Crucial Role in Human Decision-Making: Emotions act as internal feedback mechanisms that help prioritize goals and initiate actions. While AI does not experience emotions, its reward and punishment systems serve a similar function by providing feedback that shapes behavior.

Exercises to Explore the Concepts

1. Identify Your Explicit and Implicit Goals

• Reflect on your current personal or professional goals. List which of these goals are explicit (consciously defined) and which might be implicit (driving your behavior subconsciously).

• For the implicit goals, try to identify patterns in your actions that suggest these underlying motivations. Reflect on how you might adjust your behavior if you made these implicit goals more explicit.

2. Debate a Conflict Between Two Goals

• Choose a scenario where two goals are in conflict, such as balancing work and personal life, or choosing between a healthy diet and enjoying a treat.

• Write a short dialogue between “System 1” (your automatic, intuitive response) and “System 2” (your deliberate, analytical thought process) as they negotiate which goal to prioritize.

• Reflect on the outcome and what this reveals about your decision-making process.

3. Explore Personality-Driven Goal Pursuit in AI

• Take one of the examples provided in the chapter (e.g., drafting a marketing message, evaluating a new drug) and write out how an AI with a different personality might approach the task.

• For instance, how would a highly creative AI differ from a highly precise AI in addressing the problem? What trade-offs would each personality make, and how would this affect the outcome?

4. Design an Objective Function for a Game Character

• Create an objective function for a game character (e.g., a warrior, explorer, or scientist) in a video game.

• Define the weights for different goals (e.g., immediate action, exploration, and understanding) and describe how varying these weights would change the character’s behavior in different scenarios.

• Reflect on how this process could relate to real-world decision-making in humans.

5. Practice Emotional Awareness in Decision-Making

• Over the next week, observe your emotional responses in various decision-making situations. Note when emotions seem to be influencing your choices, such as fear prompting avoidance or excitement motivating action.

• Write a brief reflection each day about how your emotions guided your decisions, and consider whether any conscious overrides were necessary.

• Afterward, assess how these emotional influences align with your explicit and implicit goals.

References and Further Reading

1. “Thinking, Fast and Slow” by Daniel Kahneman (2011) – Essential for understanding the dual-system theory of cognition, which is central to the discussion of decision-making processes in humans and AI.

2. “Reinforcement Learning: An Introduction” by Richard S. Sutton and Andrew G. Barto (2018) – Provides a foundational understanding of reinforcement learning, crucial for exploring AI goal-setting and adaptation mechanisms.

3. “The Selfish Gene” by Richard Dawkins (1976) – Offers insights into biological goals and evolution, connecting the discussion of natural goals and motivations with AI.

4. “Emotional Intelligence” by Daniel Goleman (1995) – Highlights the role of emotions in decision-making, aligning with the book’s emphasis on the interplay between rational and emotional factors in humans and AI.

So Far…

We began by examining intelligence as a dynamic capability in both humans and AI, noting how it has evolved through increasingly complex systems to solve novel problems. We observed that our cognitive processes and actions can happen in an automatic, non-conscious way, and that we are moved by goals, which are themselves partly explicit and partly implicit, and that a similar pattern can also be found in Artificial Intelligence. We introduced the concepts of consciousness and emotions and discussed whether they may be a required or desirable feature for advanced AIs.

Looking Ahead…

In the next chapter, we will dive into the concept of free will, addressing whether humans truly possess the freedom to choose or if our decisions are determined by genetics, environment, and past experiences. We will explore how AI’s decision-making can be seen as a reflection of deterministic processes and contrast it with the human experience of choice. This discussion will lay the groundwork for understanding the nature of autonomy and responsibility in both human and artificial agents.
