I would like to open this book with a concept introduced by Daniel Dennett, a philosopher who has explored this idea in detail across many of his works, including his book “From Bacteria to Bach and Back: The Evolution of Minds” (2017).

The phrase “competence without comprehension” refers to the idea that a system (whether physical, biological, or artificial, like an AI) can behave effectively and perform tasks competently without being aware of them or capable of understanding them.

This concept is particularly relevant in discussions about human cognition and artificial intelligence.

AI and Competence Without Comprehension

In the context of AI, for example, a machine learning model can perform tasks like classifying images, translating languages, or playing chess at a high level, but it doesn’t “comprehend” the tasks the way a human might. The model follows statistical patterns and algorithms based on data but lacks true understanding or awareness.

This concept challenges the assumption that intelligent behavior always requires deep understanding. It suggests that systems can be competent—able to solve problems and make decisions—without having the conscious comprehension that humans attribute to intelligent thought.
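As a rough illustration of what “following statistical patterns” can mean, here is a minimal sketch of a bigram text generator in Python. It is a toy, not how modern language models are built: the corpus and the function names `build_bigram_table` and `generate` are made up for this example. The program only records which word followed which, yet it can produce word sequences that look locally plausible.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, length, seed=0):
    """Produce up to `length` words by repeatedly sampling an observed successor."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    while len(output) < length:
        successors = table.get(output[-1])
        if not successors:  # dead end: this word was never followed by anything
            break
        output.append(rng.choice(successors))
    return output


# Hypothetical toy corpus, chosen only for illustration.
corpus = "the bee finds the flower and the bee returns to the hive and the hive grows"
table = build_bigram_table(corpus)
sentence = generate(table, "the", 8)
print(" ".join(sentence))
```

Every adjacent pair of words in the output was seen in the corpus, so the result can read sensibly, but nothing in the program represents what a bee or a hive is. That gap between output quality and understanding is the point of the example.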

Kahneman’s System 1 and System 2 Thinking

A similar idea is explored by Daniel Kahneman in his work on System 1 and System 2 thinking. In his influential book “Thinking, Fast and Slow” (2011), Kahneman describes two systems of thought:

• System 1 is fast, automatic, and often unconscious. It enables quick reactions and judgments based on instinct and heuristics. While efficient, System 1 operates without deep understanding or deliberation—relying on patterns and previous experiences. This can be seen as a form of competence without comprehension, as it often produces the right answer without deep awareness or reasoning.

• System 2 is slower, more deliberate, and analytical. It involves conscious reasoning and deeper comprehension. This system aligns more with traditional notions of understanding and thoughtful problem-solving.

The distinction between these two systems mirrors Dennett’s exploration of behaviors or competencies that don’t necessarily require comprehension. Kahneman’s work helps illustrate how much of human cognition relies on fast, intuitive judgments (System 1) without full comprehension of why we think or act a certain way.

Systemic Competence: Hives and Ecosystems

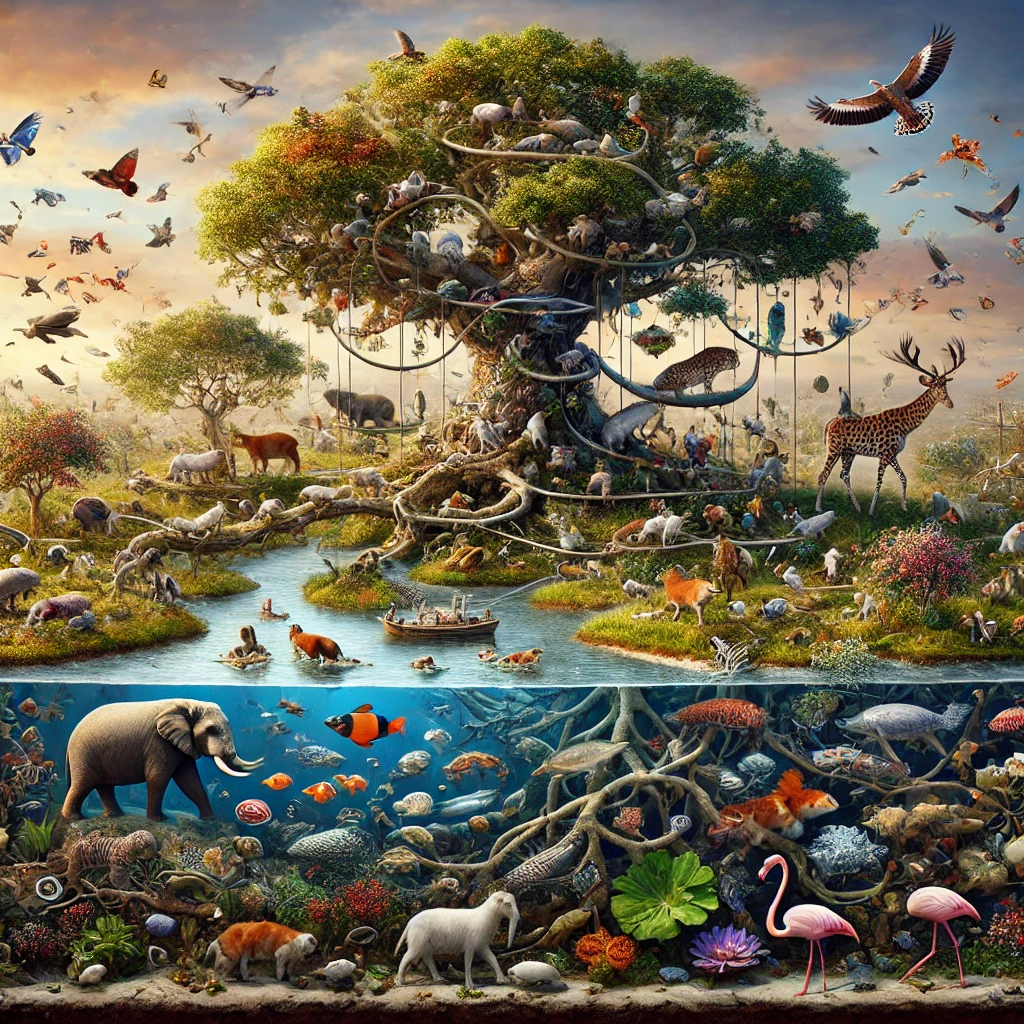

Competence without comprehension can also be expressed at a systemic level, such as in social organisms like bees in a hive or in entire ecosystems.

1. Beehives: A beehive is an excellent example of a system where the whole exhibits competence without the individual components (the bees) comprehending the broader purpose of their actions. Each bee performs its role (foraging for nectar, caring for larvae, or protecting the hive) based on instinctual behaviors evolved over millions of years. These actions result in complex, coordinated outcomes like honey production and colony survival. However, individual bees do not consciously understand the hive’s “goals.”

2. Ecosystems: Similarly, ecosystems exhibit goal-oriented behaviors through interactions between species and their environment, resulting in stability, nutrient cycling, and energy flow. Each species plays a role in maintaining this balance, though none comprehend the larger system’s purpose.

These systems demonstrate emergent behavior, where the collective actions of simpler components produce sophisticated outcomes, even without any one component having comprehension.
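A small simulation can make emergence concrete. The sketch below is a deliberately simplified toy and not a model of real bees or ecosystems: ten hypothetical agents sit in a ring, each holding a number, and each repeatedly replaces its number with the average of its own and its two neighbors’ values. No agent ever sees the whole group, yet the group settles on a shared value equal to the overall average.

```python
def step(values):
    """Each agent averages its own value with those of its two ring neighbors."""
    n = len(values)
    return [
        (values[(i - 1) % n] + values[i] + values[(i + 1) % n]) / 3
        for i in range(n)
    ]


def run(values, rounds):
    """Apply the local averaging rule for a fixed number of rounds."""
    for _ in range(rounds):
        values = step(values)
    return values


# Hypothetical starting values, one per agent.
initial = [float(v) for v in [3, 9, 1, 7, 5, 2, 8, 4, 6, 10]]
final = run(initial, 200)

global_mean = sum(initial) / len(initial)
spread = max(final) - min(final)  # how far apart the agents still are
print(f"group mean: {global_mean}, spread after 200 rounds: {spread:.2e}")
```

The only rule in the code is local (look at two neighbors), while the outcome is global (agreement on the group average). The agreement is a property of the system, not of any single agent.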

Why are the concepts of competence without comprehension and System 1 and System 2 important?

The concepts of competence without comprehension and System 1 thinking are important because they provide insight into how both humans and AI can perform tasks competently without fully understanding them. In humans, System 1 governs intuitive, fast, and automatic actions. This kind of thinking mirrors the capabilities of early GPT models, which perform tasks like writing, translation, or generating creative content without reasoning through them deeply.

The level of AI competence at this stage is remarkable, particularly in digital tasks. For instance, creative processes such as generating stories, songs, or visual content are now within AI’s domain, a capability previously thought to require human-level creativity.

AI’s skill at self-driving also demonstrates how System 1-like operations allow it to perform complex real-world tasks with relative ease, albeit with limitations.

Interestingly, there are other seemingly simple real-world tasks with which AI systems still struggle. The difficulty arises not necessarily from the cognitive complexity of these tasks, but mostly from the lack of training data. Unlike language models trained on vast amounts of text, robots interacting with the real world have so far had far fewer examples to learn from.

In general, when new or complex situations arise, AI still struggles because it lacks the reasoning and problem-solving flexibility that humans gain when they switch to System 2, which, by the way, comes with its own capabilities and limitations.

System 2, in fact, while capable of handling unexpected situations and thinking in a logical and deliberate manner, is slow compared to System 1 and requires much more effort and energy. Humans rely on this kind of reasoning to solve novel problems, especially when more careful planning or decision-making is required, but for most of their activities they rely on System 1.

Recent advancements in AI, like OpenAI’s o1 or GPT-4 with reasoning, suggest that we’re moving towards developing System 2-like AI—machines capable of more sophisticated reasoning, able to weigh different outcomes, and solve complex problems that require long-term planning.

Are these systems self-aware already?

This is not a trivial question. These systems have certainly been instructed to say they are not self-aware. On the other hand, reasoning requires a certain level of metacognition, or reflection on the chain of thought, which is exactly what humans do when they think about their thinking. We also know that these systems can already think for a long period of time, if allowed to do so. This is already a paradigm shift from the fast, almost instant replies of previous generations of AI, which resembled the automatic, unconscious operation of System 1 in humans.

Will emotions be required for higher levels of intelligence?

This question builds on the previous one. Many readers probably think that self-aware systems are not a possibility, still less systems capable of having emotions. Many probably believe that, at most, we may get systems that simulate such behaviors, if we allow and engineer them to do so, but that this would not be “true” self-awareness and those would not be “true” emotions.

While this book dedicates entire chapters to self-awareness and emotions, in short, I believe that both may be essential for AI to handle tasks involving goals, social interactions, and learning effectively. Additionally, we cannot rule out that self-awareness or emotions could arise as emergent properties, meaning they may develop naturally rather than being explicitly engineered—possibly even already emerging in some form within current AI systems.

Key points to remember:

1. Competence Without Comprehension: Both biological and artificial systems can perform tasks efficiently without knowing the reasons behind their actions or how the tasks are achieved.

2. System 1 and AI: Most AI models, like human System 1, operate automatically and efficiently, without the need for deep reasoning or conscious awareness.

3. System 2 and AI: Humans use slow, deliberate reasoning for complex problems, and AI is only beginning to exhibit these capabilities with reasoning models like OpenAI’s o1.

4. Emergent Systemic Competence: Systems like beehives and ecosystems show complex, collective behaviors without individual understanding of the overall purpose.

5. AI’s Limitations: Despite advances in creativity and reasoning, AI still lacks self-awareness and adaptability in unpredictable situations, requiring further development.

Exercises for Exploring Competence Without Comprehension and System 1 Thinking

1. Observe Automatic Thinking: Spend a day identifying moments when you act instinctively, like driving or making quick decisions. Reflect on how much of this is System 1 competence, operating without deliberate thought.

2. Spot “Competence Without Comprehension” in Nature: Observe animals, plants, or systems (like traffic) and note how they perform tasks without understanding the bigger picture. Reflect on the emergent behaviors that arise from simple actions.

3. Test AI’s System 1 Creativity: Use an AI tool (like GPT) to generate a story or poem. Reflect on how it creates without comprehension, focusing on its ability to mimic creativity.

4. Mindful Task-Switching: For a complex problem, notice how you switch from automatic responses (System 1) to deliberate reasoning (System 2). Document when and why you switch modes.

5. Household Automation: Observe a task that feels automatic (e.g., cleaning or cooking). Imagine how AI or robots could perform these actions, and identify what’s missing for them to perform the task as effortlessly as you do.

Further readings:

1. “From Bacteria to Bach and Back: The Evolution of Minds” by Daniel Dennett – A deep exploration of competence without comprehension, emergent behaviors, and how evolution has shaped both biological and artificial intelligence.

2. “Thinking, Fast and Slow” by Daniel Kahneman – An influential book that details System 1 and System 2 thinking, and how these cognitive systems affect decision-making and behavior.
